Learning Teachable Machine is one of my learning goals for Semester 2. I want to create and integrate a machine learning model into a cyber-physical system where it can be useful, and to become comfortable using TensorFlow to apply that model. For our CPS Project – Critical Infrastructure group assignment, we used machine learning to recognise different brands and models of mobile phones using Teachable Machine. Teachable Machine is a free web-based tool that makes creating machine learning models fast, easy, and accessible to everyone. It is flexible because we can use examples from files or capture examples live. Our group decided to capture the images live because we had samples of the different phone brands and models on hand.

The Components

- Laptop with built-in webcam

- Sample images, or the objects themselves, that we want to train on. For our group project, these are mobile phones.

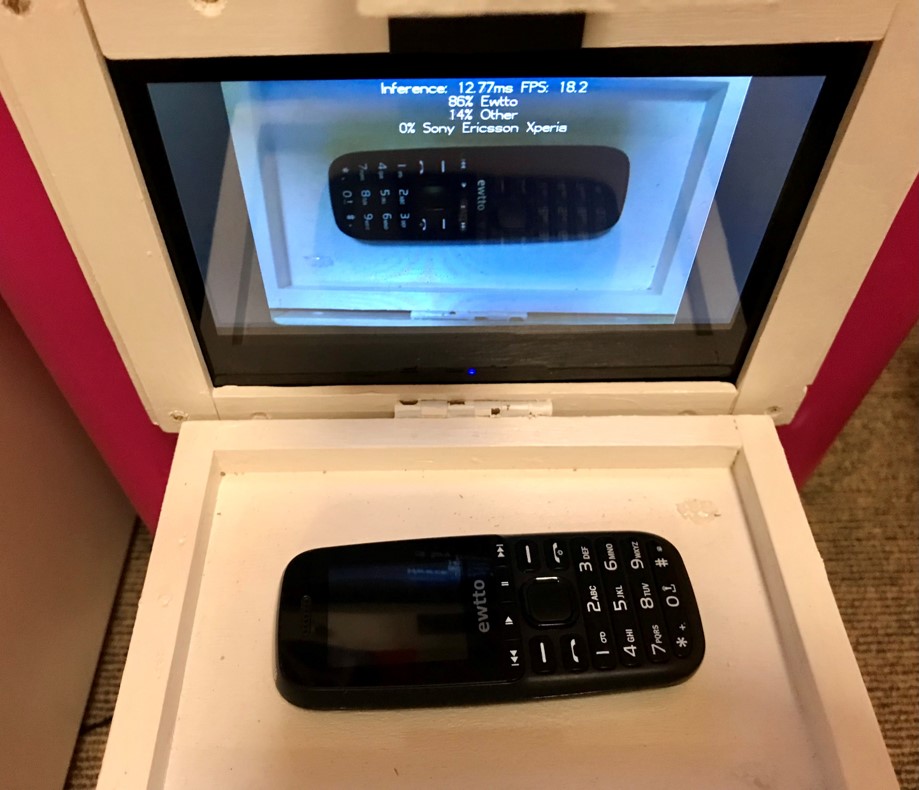

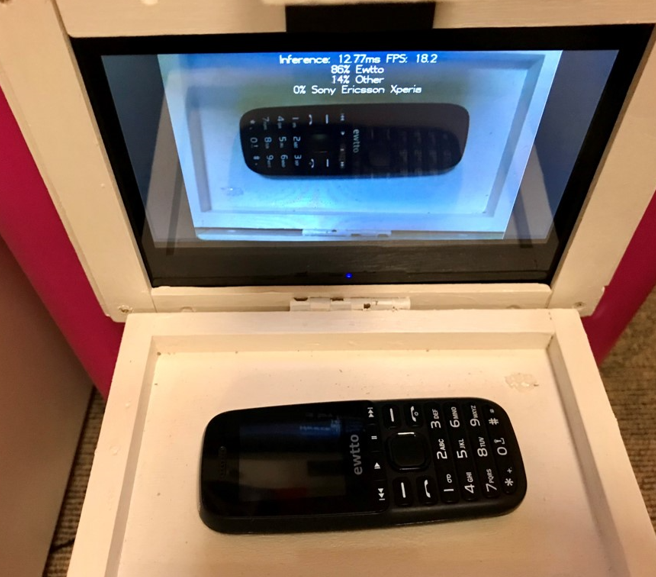

- We started with 5 mobile phones, but on Demo Day we used only 3 because those were the ones I had with me in Sydney; the other 2 were in Canberra. Here are the old mobile phones that we managed to collect from our friends and family:

- Ewtto (from Matthew Phillips)

- Nokia 3310 (Julian)

- iPhone 5C (Myrna)

- Sony Ericsson Xperia (Myrna)

- Samsung Galaxy S2 (Julian)

- Other

- Raspberry Pi

- Pi Camera and the cable to connect it to the Raspberry Pi

- Coral USB Accelerator

The Process

Using Teachable Machine for the first time is really easy and fun, from capturing images to training the model and testing it on your laptop. Doing the same on a Raspberry Pi requires a Pi Camera and a few more steps, especially to replicate the image recognition capability. The link for SMARC Teachable Machine model.

Using TensorFlow.js – On my first attempt I used JavaScript because I didn’t yet have the Coral USB Accelerator needed to use TensorFlow Lite.

- Gather the mobile phone examples that I want the computer to learn and group them into 6 classes/categories (~1000 images per class).

- Train the model, and then test it out to see whether the model can correctly classify new examples.

- Export the model in TensorFlow.js format for the SMARC project: choose Upload (shareable link), then copy the shareable link.

- Open the p5.js editor webpage: https://editor.p5js.org/codingtrain/sketches/PoZXqbu4v

- Starting from the sample code provided by Daniel Shiffman of The Coding Train for Teachable Machine 1: Image Classification, I modified it to classify the mobile phones that I had already trained for SMARC in Teachable Machine.

- To make the classification more interesting, I used emoji from Emojipedia:

- 😀 as the default when scanning

- 📱 for classified mobile phones

- 📵 for other (not mobile phone)

- The final code can be found here: SMARC p5.js code
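
The emoji choice described above comes down to a small decision rule. My actual sketch is written in p5.js, so the version below is only a Python illustration of the idea, not the SMARC code itself. The function name `emoji_for` and the 0.8 confidence threshold are my own assumptions for this example.

```python
# Illustrative only: the real SMARC sketch is in p5.js.
# The names and the threshold value below are assumptions.
SCANNING = "😀"   # default while scanning
PHONE = "📱"      # a trained mobile phone was recognised
NOT_PHONE = "📵"  # the "Other" class (not a mobile phone)


def emoji_for(label, confidence, threshold=0.8):
    """Pick the emoji to display for one classification result."""
    # No result yet, or the model is unsure: keep showing the scanning face.
    if label is None or confidence < threshold:
        return SCANNING
    # The "Other" class means the object is not one of the trained phones.
    if label.strip().lower() == "other":
        return NOT_PHONE
    return PHONE


if __name__ == "__main__":
    print(emoji_for("Nokia 3310", 0.95))
    print(emoji_for("Other", 0.90))
    print(emoji_for(None, 0.0))
```

Using a threshold means a low-confidence guess keeps the scanning face on screen instead of flickering between phone and not-phone.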

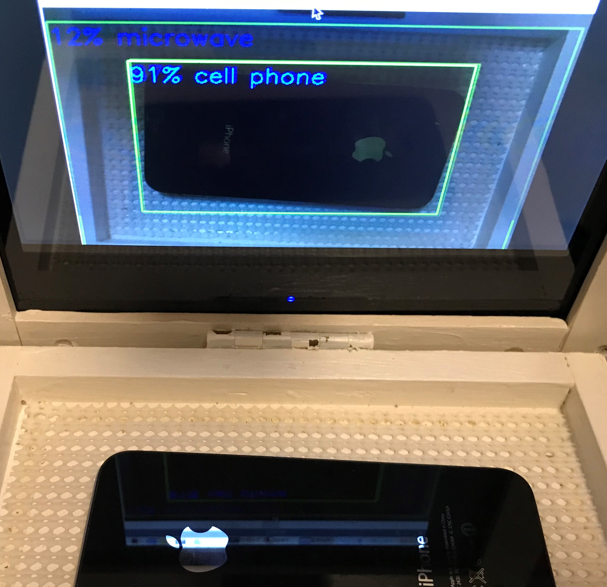

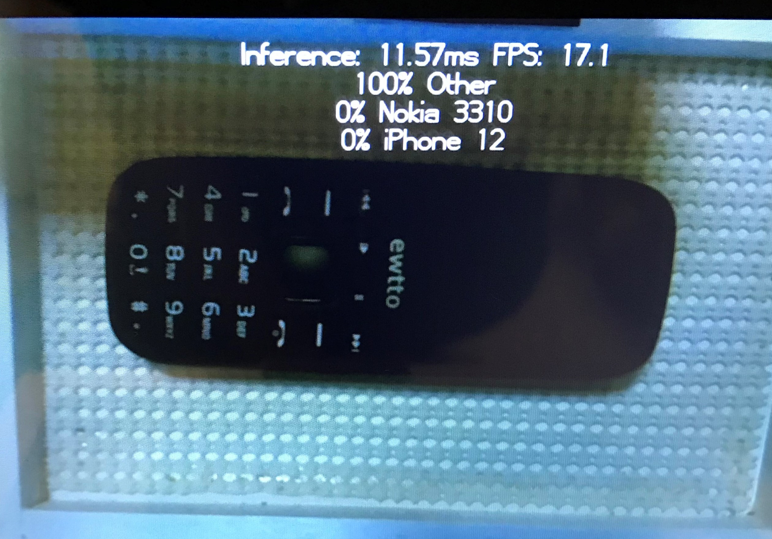

Using TensorFlow Lite – On my second attempt I used the Coral USB Accelerator.

- Select Edge TPU as the model conversion type.

- Download my model.

- Unzip converted_edgetpu.zip, which contains 2 files: model_edgetpu.tflite and labels.txt

- The Coral code snippet that Teachable Machine provides for using your model didn’t work for me, so follow these instructions to set up the Coral USB Accelerator instead.

- Then follow the instructions here to set up the Pi Camera with the Coral USB Accelerator, and follow these instructions next to test it.

- Rename the file model_edgetpu.tflite to smarc_edgetpu.tflite and labels.txt to smarc_labels.txt.

- Copy these 2 files from your laptop to your Raspberry Pi, into the following folder: /home/pi/examples-camera/all_models

- Modify the sample code in classify_capture.py from the raspicam folder, rename it to SMARC_Final_Program.py, and save it on the Raspberry Pi in the following folder: /home/pi/examples-camera/raspicam

- Run SMARC_Final_Program.py from any code editor (Visual Studio Code, Mu Editor, Thonny, etc.)
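
Part of modifying the sample program is turning the model's output scores into a phone name using the renamed labels file. The sketch below shows only that step in plain Python, and it is not the content of SMARC_Final_Program.py. It assumes each line of smarc_labels.txt looks like `0 Ewtto` (an index followed by the class name), which is how I understand the Teachable Machine export, and it also accepts plain names. The helper names and the class order in the sample are assumptions for illustration.

```python
# A minimal sketch, not the actual SMARC program. Helper names are hypothetical.
# Folder and file name are taken from the steps above.
LABELS_PATH = "/home/pi/examples-camera/all_models/smarc_labels.txt"


def parse_labels(text):
    """Parse lines such as '0 Nokia 3310' (or plain 'Nokia 3310') into {index: name}."""
    labels = {}
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue  # skip blank lines
        first, _, rest = line.partition(" ")
        if first.isdigit() and rest:
            labels[int(first)] = rest.strip()
        else:
            # No leading index: number the labels in file order.
            labels[len(labels)] = line
    return labels


def load_labels(path=LABELS_PATH):
    """Read and parse the labels file from disk."""
    with open(path, encoding="utf-8") as handle:
        return parse_labels(handle.read())


def top_class(scores, labels):
    """Return (label, score) for the highest-scoring class."""
    if not scores:
        raise ValueError("scores is empty")
    best = max(range(len(scores)), key=lambda i: scores[i])
    return labels.get(best, str(best)), scores[best]


if __name__ == "__main__":
    # Sample text only; the class order here is an assumption.
    sample = "0 Ewtto\n1 Nokia 3310\n2 iPhone 5C\n3 Sony Ericsson Xperia\n4 Samsung Galaxy S2\n5 Other\n"
    labels = parse_labels(sample)
    # In the real program the scores would come from the model running on the Coral.
    print(top_class([0.01, 0.90, 0.03, 0.02, 0.02, 0.02], labels))
```

Keeping the label parsing separate from the camera loop makes it possible to test it on a laptop before the Coral USB Accelerator is available.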

Reflections

To prototype SMARC, which demands fast on-device inferencing for machine learning models, we needed an Edge TPU as a coprocessor, such as the Coral Dev Board or the Coral USB Accelerator. I had never used either Coral device before. I started with the Coral Dev Board because that’s what I received from Mina, and I followed the instructions to set up the Dev Board here. However, I couldn’t get this to work either and got stuck at the “Flash the board” step, so I asked for the Coral USB Accelerator as an alternative. Unfortunately, none were available from the 3Ai studio. Two of our cohort members, Adrian and Chloe, had Coral USB Accelerators from their Maker Project last semester, but I couldn’t borrow one earlier because Chloe’s team needed theirs for their CPS project and Adrian needed his to test the model in Adelaide. In the end, Chloe’s team decided not to use the Coral USB Accelerator, and I was able to borrow it a couple of days before Demo Day, when I finally made it back to Canberra after 5 months stuck in the Sydney lockdown.

Our group tried to work collaboratively across 3 different cities/states. We trained the Teachable Machine model using different mobile phones in Sydney (Myrna) and Canberra (Julian), and the test code was then built in Adelaide (Adrian), again using different mobile phones. Unsurprisingly, when I ran the Raspberry Pi code, many of the mobile phones were misclassified as “other”. I wish I had had the Coral USB Accelerator earlier so that I could have tested the model earlier too. Unfortunately, I had neither the accelerator nor Adrian’s code until a couple of days before Demo Day.

Of course, the challenge did not end there. I had some issues with the code, and I debugged it to find the error that caused it to fail to recognise the mobile phone type or to open the right page in the Marvel App. I found that the model path in the code was wrong, so I updated it with the correct path. The code then worked, but it showed the wrong Marvel page for the type of mobile phone, so I had to go back, check the link for the correct page in the Marvel App, and update the code accordingly. All these changes happened on the last day, continuing into the morning of Demo Day. This quick turnaround for testing and debugging was quite stressful for me, especially as I had been asking for a Coral USB Accelerator since the beginning of Semester 2.
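
In hindsight, a small start-up check could have surfaced both problems sooner. The sketch below is not something we had in the SMARC code. It is a hypothetical example that checks the model file exists and that every label has a page configured. The function name `preflight` and the example.com URLs are placeholders I made up for illustration. It would catch a wrong model path and a missing or misspelled label, though not a page that exists but points to the wrong phone.

```python
from pathlib import Path


def preflight(model_path, labels, pages):
    """Return a list of problems; an empty list means the setup looks consistent.

    model_path: path to the .tflite model file
    labels:     class names the model can output
    pages:      mapping from class name to the page to open (hypothetical URLs)
    """
    problems = []
    if not Path(model_path).is_file():
        problems.append(f"model file not found: {model_path}")
    for label in labels:
        if label not in pages:
            problems.append(f"no Marvel page configured for label: {label}")
    for label in pages:
        if label not in labels:
            problems.append(f"page configured for unknown label: {label}")
    return problems


if __name__ == "__main__":
    # Placeholder values for illustration only.
    model = "/home/pi/examples-camera/all_models/smarc_edgetpu.tflite"
    labels = ["Nokia 3310", "iPhone 5C", "Other"]
    pages = {
        "Nokia 3310": "https://example.com/smarc/nokia-3310",
        "iPhone 5C": "https://example.com/smarc/iphone-5c",
    }
    for problem in preflight(model, labels, pages):
        print("CHECK FAILED:", problem)
```

Running a check like this before the camera loop starts turns a silent misclassification or wrong page into an explicit message.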

I also looked for an alternative way to apply the Teachable Machine model without the Coral USB Accelerator, using TensorFlow.js and p5.js. I had never programmed in JavaScript before, so this was a challenge for me. Thanks to Memunat, who recommended the p5.js tutorials on YouTube, I managed to apply the model and display the results with some emoji. Matt also kindly offered to help build the interface website using the p5.js code that I modified for SMARC. Thank you so much, Matt, for showing me how to use localhost and teaching me more about JavaScript.

Integrating Teachable Machine with the Marvel App is another skill that I learned this semester. The Marvel App is a prototyping website that our group used to build the user interface that showcases SMARC. I used the Marvel App as the interface because I wasn’t sure if I could get the Teachable Machine model to work on the Raspberry Pi, so I thought a mock-up interface would at least show the concept and how it could work in the future. I was very worried that we wouldn’t have a working prototype on Demo Day, given my challenges with getting the Coral USB Accelerator.

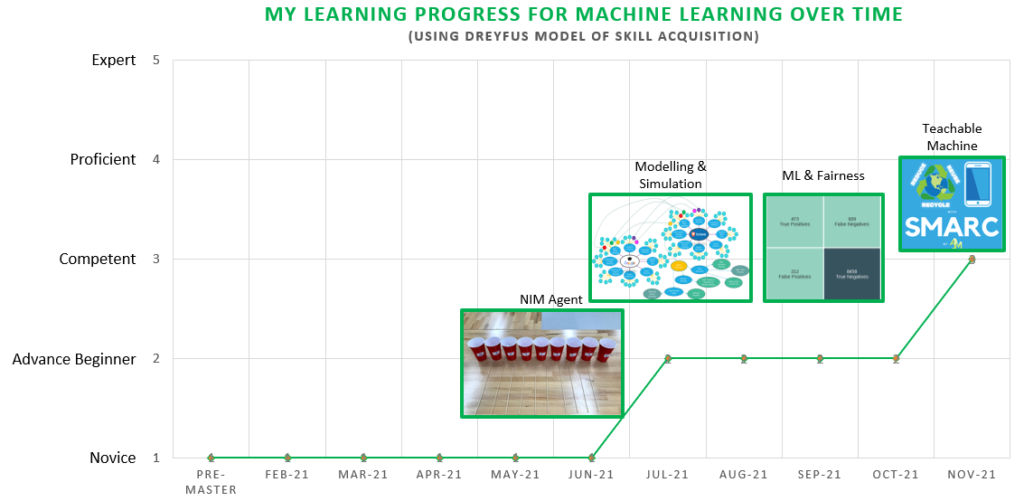

Overall, based on the Dreyfus Model of Skill Acquisition, and after going through the process and challenges of building SMARC with Teachable Machine for the CPS Project, I would say my machine learning skill has moved from Beginner level at the end of Semester 1 to Competent level now. Although it was challenging, stressful and frustrating, I’m grateful to have experienced this rollercoaster learning journey. It forced me up a steeper learning curve, and I would like to keep developing my machine learning skills to reach the Proficient level by the time the Master’s program finishes. Hopefully, I can apply this skill when I’m back at work next year.

Acknowledgement

- Chloe for lending me her Coral USB Accelerator from her Maker Project in Semester 1.

- Memunat, who introduced me to The Coding Train’s p5.js YouTube channel.

- Matt for helping me with the JavaScript code to build the interface website for SMARC to scan the mobile phones.

- Matthew Phillips, who helped me troubleshoot the Coral Dev Board and lent me his old Ewtto mobile phone.

- Xuanying Zhu for sending some photos of her old Samsung mobile phone.